Once again, we consider solving the equation $Ax = b$, where $A \in \mathbb{R}^{n \times n}$ is nonsingular. When we use a factorization method for dense matrices, such as LU, Cholesky, SVD, or QR, we perform $O(n^3)$ operations and store $O(n^2)$ matrix entries. As a result, it’s hard to model a real-life problem more accurately by adding more variables.

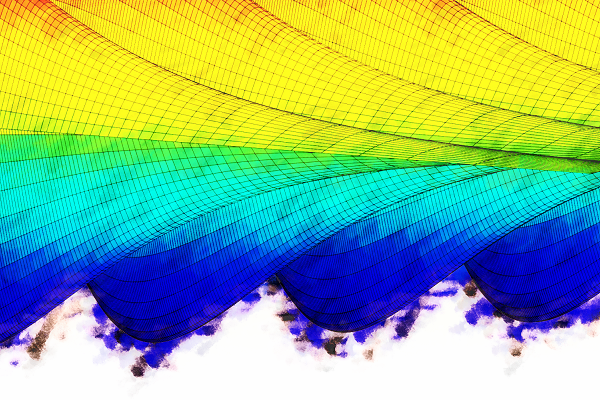

Luckily, when the matrix is sparse (e.g. because we used a finite difference or finite element method), we can use an iterative method to approximate the solution efficiently. Typically, an iterative method incurs $O(n^2)$ operations, if not fewer, and requires $O(n)$ storage.
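To make the storage claim concrete, here is a minimal sketch in plain Python. It assumes a tridiagonal matrix of the kind a 1D finite difference discretization produces; the helper names `tridiagonal_rows` and `sparse_matvec` are hypothetical and not part of any library. Only the nonzero entries are stored, so both the storage and the cost of one matrix-vector product grow with the number of nonzeros rather than with $n^2$.

```python
def tridiagonal_rows(n):
    """Store the matrix with 2 on the diagonal and -1 beside it
    as one {column: value} dict per row, keeping only nonzeros."""
    rows = []
    for i in range(n):
        row = {i: 2.0}
        if i > 0:
            row[i - 1] = -1.0
        if i < n - 1:
            row[i + 1] = -1.0
        rows.append(row)
    return rows


def sparse_matvec(rows, x):
    """Compute y = A x, touching each stored nonzero exactly once."""
    return [sum(value * x[j] for j, value in row.items()) for row in rows]


n = 1000
A = tridiagonal_rows(n)
stored = sum(len(row) for row in A)  # 3n - 2 entries instead of n^2
y = sparse_matvec(A, [1.0] * n)
```

A matrix-vector product is the main building block of the iterative methods discussed in this series, which is why a cheap product translates into a cheap iteration.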

Over the next few posts, we will look at 5 iterative methods:

- Jacobi

- Gauss-Seidel

- Successive Over-Relaxation (SOR)

- Symmetric SOR (SSOR)

- Conjugate Gradient (CG).
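As a preview of the first method in the list, the following is a minimal sketch of the Jacobi iteration in plain Python. The function name `jacobi`, the fixed iteration count, and the small test system are assumptions chosen for illustration; the matrix is strictly diagonally dominant, a standard sufficient condition for Jacobi to converge.

```python
def jacobi(A, b, num_iterations=100):
    """Approximate the solution of A x = b with Jacobi iteration.

    Each new component x_i is computed from the previous iterate only:
    x_i = (b_i - sum_{j != i} A[i][j] * x_j) / A[i][i].
    """
    n = len(b)
    x = [0.0] * n  # initial guess
    for _ in range(num_iterations):
        x_new = []
        for i in range(n):
            off_diagonal = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x_new.append((b[i] - off_diagonal) / A[i][i])
        x = x_new
    return x


A = [[4.0, -1.0, 0.0],
     [-1.0, 4.0, -1.0],
     [0.0, -1.0, 4.0]]
b = [3.0, 2.0, 3.0]  # chosen so that the exact solution is [1, 1, 1]
x = jacobi(A, b)
```

A practical implementation would stop once the residual falls below a tolerance instead of running a fixed number of sweeps.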

In addition, we will solve the 2D Poisson equation using these methods. We will compare the approximate solutions to the exact solution to illustrate the accuracy of each method.
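For orientation, here is a sketch of the linear system that the 2D Poisson equation typically leads to. It assumes the standard five-point finite difference stencil on an $N \times N$ grid of interior points with zero Dirichlet boundary values, and it omits the $1/h^2$ mesh scaling; the excerpt does not state the discretization, so these choices and the name `poisson_2d_rows` are assumptions.

```python
def poisson_2d_rows(N):
    """Build the five-point Laplacian on an N x N interior grid.

    Grid point (i, j) maps to unknown k = i * N + j. Each row is a
    {column: value} dict holding only the nonzero entries.
    """
    rows = []
    for i in range(N):
        for j in range(N):
            k = i * N + j
            row = {k: 4.0}
            if i > 0:
                row[k - N] = -1.0  # neighbor in the previous grid row
            if i < N - 1:
                row[k + N] = -1.0  # neighbor in the next grid row
            if j > 0:
                row[k - 1] = -1.0  # left neighbor
            if j < N - 1:
                row[k + 1] = -1.0  # right neighbor
            rows.append(row)
    return rows


A = poisson_2d_rows(3)
nonzeros = sum(len(row) for row in A)  # 5 N^2 - 4 N
```

Each row has at most five nonzeros, so the matrix has $n = N^2$ rows but only about $5n$ stored entries.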


We assume that $A$ is nonsingular, and define the $k$-th Krylov subspace as follows:

$$\mathcal{K}_k = \operatorname{span}\{b, Ab, A^2 b, \ldots, A^{k-1} b\}.$$
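A Krylov subspace can be illustrated with a few lines of plain Python. This sketch assumes the common definition in which the $k$-th subspace is spanned by $b, Ab, \ldots, A^{k-1}b$; the helper names `matvec` and `krylov_vectors` are hypothetical.

```python
def matvec(A, x):
    """Dense matrix-vector product for a list-of-rows matrix."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def krylov_vectors(A, b, k):
    """Return the k spanning vectors [b, A b, ..., A^(k-1) b]."""
    vectors = [list(b)]
    for _ in range(k - 1):
        vectors.append(matvec(A, vectors[-1]))
    return vectors


A = [[2.0, 1.0],
     [1.0, 3.0]]
b = [1.0, 0.0]
K = krylov_vectors(A, b, 3)  # [[1, 0], [2, 1], [5, 5]]
```

Building the subspace needs only repeated matrix-vector products. In practice these raw vectors quickly become nearly linearly dependent, so Krylov methods orthogonalize them as they go.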

$A$ is symmetric, positive definite (SPD), i.e. $A^T = A$ and $x^T A x > 0$ for all $x \neq 0$.
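One practical way to test the SPD property is to attempt a Cholesky factorization, which succeeds with strictly positive pivots exactly when a symmetric matrix is positive definite. The sketch below does this in plain Python; the function name `is_spd` and the tolerance are assumptions, and for large matrices a library routine would be the better choice.

```python
import math


def is_spd(A, tol=1e-12):
    """Return True if A is symmetric and a Cholesky factorization succeeds."""
    n = len(A)
    # Symmetry check: compare each entry below the diagonal with its mirror.
    for i in range(n):
        for j in range(i):
            if abs(A[i][j] - A[j][i]) > tol:
                return False
    # Cholesky factorization A = L L^T, column by column.
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        pivot = A[j][j] - sum(L[j][k] ** 2 for k in range(j))
        if pivot <= tol:
            return False  # non-positive pivot: A is not positive definite
        L[j][j] = math.sqrt(pivot)
        for i in range(j + 1, n):
            partial = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = (A[i][j] - partial) / L[j][j]
    return True


spd_example = is_spd([[2.0, -1.0], [-1.0, 2.0]])
indefinite_example = is_spd([[1.0, 2.0], [2.0, 1.0]])
```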
