Let’s look at one more way to solve the equation . We assume that

is nonsingular, and define the

-th Krylov subspace as follows:

.

Krylov subspace methods are efficient and popular iterative methods for solving large, sparse linear systems. When is symmetric, positive definite (SPD), i.e.

we can use a Krylov subspace method called Conjugate Gradient (CG).

Today, let’s find out how CG works and use it to solve 2D Poisson’s equation.

4. Conjugate Gradient

a. Creating iterates

Let be an inner product for

, and

the norm associated with the inner product.

How Krylov subspace methods work is simple. We will approximate the exact solution with a sequence of iterates

that meet two conditions:

In other words, when measured in the norm ,

is the best approximation to

from the

-th Krylov subspace.

(For initialization, we will take and

.)

The optimality condition above is the same as the orthogonality condition under the inner product

. Therefore,

satisfies the equation,

.

Lemma 1.

The orthogonality condition and the fact that

imply two things. At every iteration

,

Proof.

First, note that the vector

represents the decrease in error over 1 iteration.

Since and

, we know that

.

Furthermore, for any ,

■

b. Creating residuals

Next, we select a vector that meets these conditions:

(For initialization, we will take .)

The vector also satisfied these conditions, so the two are linearly dependent. There is some constant

such that

.

We now have a way to compute the best approximation from the previous one:

.

This holds, provided we have a search direction .

Lemma 2.

The coefficient

is given by,

,

where

is the residual.

Proof.

We have the equation,

.

On each side, take the inner product with :

.

Since and

, the LHS is equal to 0. Hence,

.

Note, .

■

Lemma 3.

The residuals are related in the following manner:

.

Proof.

Apply to both sides of the equation:

.

■

c. Creating search directions

We will now construct the search direction so that we can continue the iteration. Along the way, we required the search directions to satisfy,

.

Thus, if also meets these conditions, namely,

,

then will end up forming an orthogonal basis for

.

We can create such a sequence using Gram-Schmidt method! Specifically, will be the sum of a vector in

(which can be written as a linear combination of

) and an offshoot

:

.

Then, we orthogonalize against

.

Lemma 4.

In the

-th iteration, the coefficients

are given by,

.

Proof.

Given the equation,

,

we can take the inner product with :

.

Since and

, the LHS is equal to 0. Furthermore,

forms an orthogonal basis, so the summation on the RHS reduces to a single term:

.

We can now solve for :

.

■

Isn’t it cool to work backwards and see the motivations? We just need to figure out how to choose .

d. SPD

So far, we have not used the fact that is SPD. We do so now to obtain CG.

Lemma 5.

If

is SPD, then

defines an inner product for

. Then,

gives us a norm, called the

-norm.

Proof.

Let’s show that, for all vectors and scalars

, the

-inner product is symmetric, bilinear, and positive-definite:

Symmetric:

Notice how we used symmetry of the Euclidean inner product.

Bilinear:

Since the -inner product is symmetric, we also have linearity in the second argument.

Positive-definite:

Because is positive definite,

.

The -inner product is equal to zero only when

.

■

The key thing to realize is that Sections 4a – 4c are valid for any nonsingular matrix . We can create a Krylov subspace method even if

is not positive definite, or even symmetric. We just need to define an inner product and select

appropriately.

Let’s summarize what we learned so far using the -inner product.

We want to arrive at the solution by a sequence of best approximations

. Best approximations satisfy the orthogonality condition:

.

By sheer luck (or well-planning), the residual is orthogonal to the Krylov subspace

in the Euclidean inner product:

.

In particular, this means the residuals are orthogonal to each other. Hence the name Conjugate Gradient (“orthogonal residuals” didn’t sound good).

Given a best approximation , we can find the next one

:

,

where,

.

We can also find the residual:

.

Notice that appeared again. It’d be nice to implement a sparse matrix-vector multiplication and store this result.

To continue the iteration, we decide on a search direction for some :

,

where,

.

e. Creating offshoots

There are a few candidates for . We will select the residual,

,

so that all but one of the coefficients will be 0!

Lemma 6.

When

,

.

Proof.

First, let’s check that .

We know that the residual resides in

, because

(by definition of the Krylov subspace) and

implies

.

Moreover, is orthogonal to

in the Euclidean inner product, so

. By the same token,

■

Lemma 7.

When

, we can simplify the formulas for

,

, and

:

Proof.

The formula for comes from Lemma 6.

From Section 4d, we know that,

.

By orthogonality, the numerator of simplifies to:

As for , note that,

.

■

f. Summary

The pseudocode for CG looks like this:

% Initialize

x0 = zeros(n, 1), r0 = b, p1 = b;

for k = 1 : numIterations

% Sparse matrix-vector multiply

Ap1 = A * p1;

% Find alpha

alpha1 = (r0' * r0) / (p1' * Ap1);

% Find the iterate

x1 = x0 + alpha1 * p1;

% Find the residual

r1 = r0 - alpha1 * Ap1;

% Find gamma

gamma1 = (r1' * r1) / (r0' * r0);

% Find the search direction

p2 = r1 + gamma1 * p1;

% Update the iterates

x0 = x1;

r0 = r1;

p1 = p2;

end

Notice how we only need a sparse matrix-vector multiply, a vector-scalar multiply (axpy), and a dot product to solve a matrix equation. This is the power of iterative methods. We can make our code more efficient by reusing variables:

% Initialize

x = zeros(n, 1), r = b, p = b;

r_dot = r' * r;

for k = 1 : numIterations

% Sparse matrix-vector multiply

Ap = A * p;

% Find alpha and initialize gamma

alpha = r_dot / (p' * Ap);

gamma = r_dot;

% Find the iterate

x = x + alpha * p;

% Find the residual

r = r - alpha * Ap;

r_dot = r' * r;

% Find gamma

gamma = r_dot / gamma;

% Find the search direction

p = r + gamma * p;

end

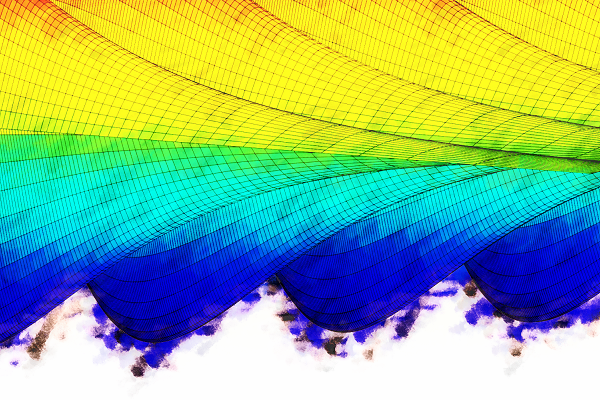

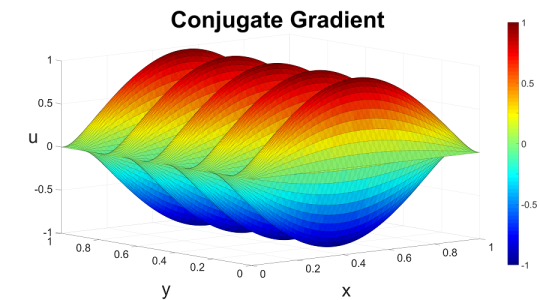

5. Example

Let’s go back to 2D Poisson’s equation on a unit square domain, with homogeneous Dirichlet BC. Our RHS function is given by,

,

and we choose ,

, and

as before.

Stunningly, CG converges in 1 iteration! As far as iterative methods go, you can’t do better than that.

The question is how? The RHS function that I chose makes the Poisson’s equation an eigenfunction problem. Even at the discrete level, the RHS vector happens to be an eigenvector of

:

.

As a result, in the first iteration,

Talk about luck (or well-planning)!

Notes

A big thanks goes to Dr. Ye, one of my teachers at Kentucky. He instilled my passion for numerical linear algebra and applications thereof. To learn additional iterative methods, I recommend reading his paper linked below.

You can find the code in its entirety here:

References

[1] Isaac Lee, lecture notes (2011).

[2] Qiang Ye, Deriving Optimal Krylov Subspace Methods.

[3] Qiang Ye, lecture notes (2009).